Image Analysis and Data Fusion (IADF)

MISSION

The TC comprises three working groups dedicated to distinct fields within the scope of image analysis and data fusion, namely WG-MIA (Machine/Deep Learning for Image Analysis), WG-ISP (Image and Signal Processing), and WG-BEN (Benchmarking).

Organization

The IADF Technical Committee encourages participation from all its members. The committee organization includes the Chair, two Co-Chairs, and three working groups led by working group leads.

IADF Technical Committee Chair

| Prof. Claudio Persello University of Twente The Netherlands |

IADF Technical Committee Co-Chairs

| Dr. Gemine Vivone National Research Council Italy |

| Dr. Saurabh Prasad |

Working Group Leads

WG on Machine/Deep Learning for Image Analysis (WG-MIA)

WG-MIA Lead

| Dr. Dalton Lunga Oak Ridge National Laboratory USA |

WG-MIA Co-Lead

| Dr. Ujjwal Verma |

| Dr. Silvia Ullo |

| Dr. Ronny Hänsch |

| Prof. Danfeng Hong Chinese Academy of Sciences China |

WG on Image and Signal Processing (WG-ISP)

WG-ISP Lead

| Dr. Gülşen Taşkın Istanbul Technical University (VITO) Turkey |

WG-ISP Co-Lead

| Dr. Stefan Auer |

| Dr. Loic Landrieu LASTIG, IGN/ENSG, UGE, France |

| Dr. Lexie Yang Oak Ridge National Laboratory USA |

WG on Benchmarking (WG-BEN)

WG-BEN Lead

| Prof. Xian Sun Chinese Academy of Sciences China |

WG-BEN Co-Lead

| Seyed Ali Ahmadi K. N. Toosi University of Technology Iran |

| Dr. Francescopaolo Sica |

| Dr. Yonghao Xu |

| Valerio Marsocci |

News

Registration is now open for GRSS IADF School on Computer Vision for Earth Observation

We are thrilled to announce that registration for the 3rd Edition of the GRSS IADF School on Computer Vision for Earth Observation is now open. This highly anticipated event will take place at the University of Sannio in Benevento, Italy, from 11th to 13th September 2024. This school will contain a series of lectures on the existing methods utilized for analyzing satellite images, along with the challenges encountered. Each lecture will be followed by a practical session where the participants will go deep into the details of the techniques discussed in the lecture using some commonly used programming languages (e.g., Python) and open-source software tools.

Application Closes 31st May 2024.

More Details: iadf-school.org/

The EarthVision 2024 workshop will take place at the Computer Vision and Pattern Recognition (CVPR) 2024 Conference. We have awesome keynote speakers and a challenging contest! Don’t miss out on the latest advancements in Computer Vision and AI/ML for Remote Sensing and Earth Observation. Find out more about EarthVision 2024.

Community Contributed Sessions at IGARSS 2024: IADF TC is organizing the following Community Contributed Sessions at IGARSS 2024. More information: www.2024.ieeeigarss.org/community_contributed_sessions.php

- Title: Image Analysis and Data Fusion: The AI Era
  Organized by: IADF Chairs

- Title: IEEE GRSS Data Fusion Contest – Track 1, Track 2
  Organized by: IADF Chairs

- Title: Datasets and Benchmarking in Remote Sensing: Towards Large-Scale, Multi-Modality and Sustainability
  Organized by: WG-BEN

- Title: Sustainable Development Goals through Image Analysis and Data Fusion of Earth Observation Data
  Organized by: WG-MIA

- Title: Advances in Multimodal Remote Sensing Image Processing and Interpretation
  Organized by: WG-ISP

Machine Learning in Remote Sensing – Theory and Applications for Earth Observation Tutorial at IGARSS 2024

Workshop at ICLR 2024: Machine Learning for Remote Sensing ml-for-rs.github.io/iclr2024/

TC Newsletter

| The committee distributes an e-mail newsletter to all committee members on a monthly basis regarding recent advancements, datasets, and opportunities. If you are interested in receiving the newsletter, please join the TC. We would highly appreciate your input if you want to let us know about upcoming conference/workshop/journal deadlines, new datasets or challenges, or vacant positions in remote sensing and earth observation. |

Contests and Challenges

| Current, Past |

EOD: The Earth Observation Database

| EOD provides an interactive and searchable catalog of public benchmark datasets for remote sensing and earth observation with the aim to support researchers in the fields of geoscience, remote sensing, and machine learning. |

IADF School

| The IADF School focuses on applying CV/ML methods to address challenges in remote sensing and contains a series of lectures on the existing methods utilized for analyzing satellite images, along with the challenges encountered. |

Workshops

| Current, Past |

Special / Invited Sessions

| Current Community-Contributed Sessions, Past |

Tutorials

| Machine Learning in Remote Sensing – Theory and Applications for Earth Observation at IGARSS 2024 |

Special Issues / Streams

| Current, Past |

Webinars

Papers

| IGARSS Papers |

| Developing GeoAI: Best Practices and Design Considerations |

Data and Algorithm Standard Evaluation (DASE)

| The GRSS Data and Algorithm Standard Evaluation (DASE) website provides data sets and algorithm evaluation standards to support research, development, and testing of algorithms for remote sensing data analysis (e.g., machine/deep learning, image/signal processing). |

Working Groups

To encourage the active participation of all TC members, the IADF organization comprises, in addition to the Technical Committee Chair and two Co-Chairs, three working groups (WGs). These working groups focus on Machine/Deep Learning for Image Analysis (MIA), Image and Signal Processing (ISP), and Benchmarking (BEN). Each WG addresses a specific topic, provides input and feedback to the TC chairs, and organizes topic-related events (such as workshops, contests, tutorials, and invited sessions). Please find the WGs and their thematic scope below. If you feel that certain research or application areas are within the scope of IADF but not well represented, feel free to propose additional WGs.

WG on Machine/Deep Learning for Image Analysis (WG-MIA)

The WG-MIA fosters theoretical and practical advancements in Machine Learning and Deep Learning (ML/DL) for the analysis of geospatial and remotely sensed images. Under the umbrella of the IADF TC, WG-MIA serves as a global network that promotes the development of ML/DL techniques and their application in the context of various geospatial domains. It aims at connecting engineers, scientists, teachers, and practitioners, promoting scientific/technical advancements and geospatial applications. To promote the societal impact of ML-based solutions for the analysis of geospatial data, we seek accountability, transparency, and explainability. We encourage the development of ethical, understandable, and trustworthy techniques.

Current Activities: Organization of invited sessions at international conferences and special issues in international journals.

WG on Image and Signal Processing (WG-ISP)

The WG-ISP promotes advances in signal and image processing relying upon the use of remotely sensed data. It serves as a global, multi-disciplinary network for both data fusion and image analysis, supporting activities on several specific topics under the umbrella of the GRSS IADF TC. It aims at connecting people, supporting educational initiatives for both students and professionals, and promoting advances in signal processing for remotely sensed data.

The WG-ISP oversees different topics, such as pansharpening, super-resolution, data fusion, segmentation/clustering, denoising, despeckling, image enhancement, image restoration, and many others.

Current Activities: Organization of invited sessions at international conferences, special issues in international journals, and challenges and contests using remotely sensed data.

WG on Benchmarking (WG-BEN)

Datasets have always been important in methodical remote sensing. They have always been used as a backbone for the development and evaluation of new algorithms. In today’s era of big data and deep learning, datasets have become even more important than before: Large, well-curated, and annotated datasets are of crucial importance for the training and validation of state-of-the-art models for information extraction from increasingly versatile multi-sensor remote sensing data. In addition, due to the increasing number of new methods being proposed by scientists and engineers, the possibility to compare these methods in a fair and transparent manner has become more and more important.

The WG-BEN addresses these challenges and provides input with respect to evaluation methods, datasets, benchmarks, competitions, and tools for the creation of reference data. Furthermore, we contribute to evaluation sites and databases.

Current Activities: Organization of invited sessions at IGARSS, contribution to an online database for datasets (DASE 2.0), and showcasing of selected public datasets in the monthly IADF Newsletter.

IEEE GRSS Data Fusion Contest 2026

Dear IADF members,

The 2025 Data Fusion Contest (DFC) was a great success! It’s now time to prepare for next year’s contest. Following the previous years’ contests, we are excited to extend this community-wide invitation to submit proposals for the 2026 edition of the IEEE GRSS Data Fusion Contest.

If you are interested in co-organizing the contest, please send your proposal to grss-iadf@ieee.org by July 31, 2025. The 2026 Data Fusion Contest topic will be decided by the end of August. In your proposal, please clarify the following points:

- Tentative title of the IEEE GRSS DFC 2026

- Partners

- Overview

- Tasks

Please clarify:

– Novelty in comparison to the existing competitions and datasets

– Existence of data fusion aspects (optional)

- Datasets

Please clarify the following:

– Status and schedule: Are the data ready? Does it still need to be acquired? Are reference data available? Was any part of the data already published?

– Is the data under any kind of license?

– Will the data be available after the contest?

– Size of datasets: (tentative) number of images/scenes and (tentative) size in MB

– Example images

- Sponsors

– Is there an opportunity to sponsor a prize for the top-ranking teams (cash prize, cloud compute credits, travel cost reimbursement, etc.)?

We are looking forward to receiving your proposals.

Best regards,

IADF Chairs

For any information about past Data Fusion Contests, released data, and the related terms and conditions, please email iadf_chairs@grss-ieee.org.

2025 IEEE GRSS Data Fusion Contest

With rapid advances in small Synthetic Aperture Radar (SAR) satellite technology, Earth Observation (EO) now provides submeter-resolution all-weather mapping with increasing temporal resolution. Optical data offer intuitive visuals and fine detail, but are limited by weather and lighting conditions. In contrast, SAR can penetrate cloud cover and provide consistent imagery in adverse weather and at night, enabling frequent monitoring of critical areas, which is valuable when disasters occur or environments change rapidly. Effectively exploiting the complementary properties of SAR and optical data to solve complex remote sensing image analysis problems remains a significant technical challenge.

The 2025 IEEE GRSS Data Fusion Contest, organized by the Image Analysis and Data Fusion Technical Committee, the University of Tokyo, RIKEN, and ETH Zurich, aims to foster the development of innovative solutions for all-weather land-cover and building damage mapping using multimodal SAR and optical EO data at submeter resolution. The contest comprises two tracks focusing on land cover types and building damage, respectively, and presents two main technical challenges: effective integration of multimodal data and handling of noisy labels.

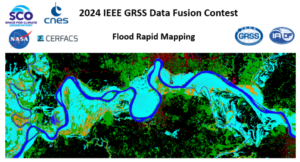

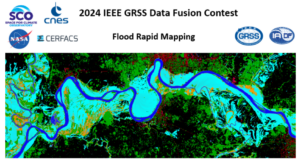

2024 IEEE GRSS Data Fusion Contest

The goal of the 2024 IEEE GRSS Data Fusion Contest was to design and develop an algorithm that combines multi-source data to classify flood surface water extent, that is, water and non-water areas. The provided data sources included optical and Synthetic Aperture Radar (SAR) remote sensing images as well as a digital terrain model, land-use, and water occurrence data. The data and the contest results can be found in the existing Data Fusion Contest tab.

2023 IEEE GRSS Data Fusion Contest

The 2023 IEEE GRSS Data Fusion Contest, organized by the Image Analysis and Data Fusion Technical Committee (IADF TC) of the IEEE Geoscience and Remote Sensing Society (GRSS), the Aerospace Information Research Institute under the Chinese Academy of Sciences, the Universität der Bundeswehr München, and GEOVIS Earth Technology Co., Ltd. aims to push current research on building extraction, classification, and 3D reconstruction towards urban reconstruction with fine-grained semantic information of roof types.

2022 IEEE GRSS Data Fusion Contest

The semi-supervised learning challenge of the 2022 IEEE GRSS Data Fusion Contest aims to promote research in automatic land cover classification from only partially annotated training data consisting of VHR RGB imagery.

2021 IEEE GRSS Data Fusion Contest

The 2021 IEEE GRSS Data Fusion Contest aimed to promote research on geospatial AI for social good. The global objective was to build models for understanding the state and changes of artificial and natural environments from multimodal and multitemporal remote sensing data towards sustainable developments. The 2021 Data Fusion Contest consisted of two challenge tracks: Detection of settlements without electricity and Multitemporal semantic change detection.

2020 IEEE GRSS Data Fusion Contest

The 2020 Data Fusion Contest aimed to promote research in large-scale land cover mapping from globally available multimodal satellite data. The task was to train a machine learning model for global land cover mapping based on weakly annotated samples. The Contest consisted of two challenge tracks: Track 1: Landcover classification with low-resolution labels, and Track 2: Landcover classification with low- and high-resolution labels.

2019 IEEE GRSS Data Fusion Contest

The 2019 Data Fusion Contest aimed to promote research in semantic 3D reconstruction and stereo using machine intelligence and deep learning applied to satellite images. The global objective was to reconstruct both a 3D geometric model and a segmentation of semantic classes for an urban scene. Incidental satellite images, airborne lidar data, and semantic labels were provided to the community.

2018 IEEE GRSS Data Fusion Contest

The 2018 Data Fusion Contest aimed to promote progress on fusion and analysis methodologies for multi-source remote sensing data. It consisted of a classification benchmark, the task to be performed being urban land use and land cover classification. The following advanced multi-source optical remote sensing data are provided to the community: multispectral LiDAR point cloud data (intensity rasters and digital surface models), hyperspectral data, and very high-resolution RGB imagery.

2017 IEEE GRSS Data Fusion Contest

The 2017 IEEE GRSS Data Fusion Contest focused on global land use mapping using open data. Participants were provided with remote sensing (Landsat and Sentinel-2) data and vector layers (OpenStreetMap), as well as a 17-class ground reference at 100 m x 100 m resolution over five cities worldwide (Local climate zones, see Stewart and Oke, 2012): Berlin, Hong Kong, Paris, Rome, and Sao Paulo. The task was to provide land use maps over four other cities: Amsterdam, Chicago, Madrid, and Xi’an. The maps were to be uploaded to an evaluation server. Please refer to the links below to learn more about the challenge, download the data, and submit your results (even now that the contest is over).

2016 IEEE GRSS Data Fusion Contest

The 2016 IEEE GRSS Data Fusion Contest, organized by the IADF TC, was opened on January 3, 2016. The submission deadline was April 29, 2016. Participants submitted open-topic manuscripts using the VHR and video-from-space data released for the competition. 25 teams worldwide participated in the Contest. Evaluation and ranking were conducted by the Award Committee.

Paper: Mou, L.; Zhu, X.; Vakalopoulou, M.; Karantzalos, K.; Paragios, N.; Le Saux, B.; Moser, G. & Tuia, D., Multi-temporal very high resolution from space: Outcome of the 2016 IEEE GRSS Data Fusion Contest, IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens., in press.

2015 IEEE GRSS Data Fusion Contest

The 2015 Contest was focused on multiresolution and multisensor fusion at extremely high spatial resolution. A 5-cm resolution color RGB orthophoto and a LiDAR dataset, comprising both the raw 3D point cloud with a density of 65 pts/m² and a digital surface model with a point spacing of 10 cm, were distributed to the community. These data were collected using an airborne platform over the harbor and urban area of Zeebruges, Belgium. The department of Communication, Information, Systems, and Sensors of the Belgian Royal Military Academy acquired and provided the dataset. Participants were asked to submit original IGARSS-style full papers using these data for the generation of either 2D or 3D thematic mapping products at extremely high spatial resolution.

Paper: M. Campos-Taberner, A. Romero-Soriano, C. Gatta, G. Camps-Valls, A. Lagrange, B. Le Saux, A. Beaupère, A. Boulch, A. Chan-Hon-Tong, S. Herbin, H. Randrianarivo, M. Ferecatu, M. Shimoni, G. Moser, and D. Tuia. Processing of extremely high-resolution LiDAR and RGB data: Outcome of the 2015 IEEE GRSS Data Fusion Contest. Part A: 2D contest. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens., 9(12):5547–5559, 2016.

Paper: A.-V. Vo, L. Truong-Hong, D.F. Laefer, D. Tiede, S. d’Oleire Oltmanns, A. Baraldi, M. Shimoni, G. Moser, and D. Tuia. Processing of extremely high-resolution LiDAR and RGB data: Outcome of the 2015 IEEE GRSS Data Fusion Contest. Part B: 3D contest. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens., 9(12):5560–5575, 2016.

2014 IEEE GRSS Data Fusion Contest

The 2014 Contest involved two datasets acquired at different spectral ranges and spatial resolutions: a coarser-resolution long-wave infrared (LWIR, thermal infrared) hyperspectral data set and fine-resolution data acquired in the visible (VIS) wavelength range. The former was acquired by an 84-channel imager covering the wavelengths between 7.8 and 11.5 μm with approximately 1-meter spatial resolution. The latter was a series of color images acquired during separate flight-lines with approximately 20-cm spatial resolution. The two data sources covered an urban area near Thetford Mines in Québec, Canada, and were acquired and provided for the Contest by Telops Inc. (Canada). A ground truth with 7 landcover classes was provided, and the mapping was performed at the higher of the two data resolutions.

Paper: W. Liao, X. Huang, F. Van Coillie, S. Gautama, A. Pizurica, W. Philips, H. Liu, T. Zhu, M. Shimoni, G. Moser, D. Tuia. Processing of Multiresolution Thermal Hyperspectral and Digital Color Data: Outcome of the 2014 IEEE GRSS DataFusion Contest. IEEE J. Sel. Topics Appl. Earth Observ. and Remote Sensing, 8(6): 2984-2996, 2015.

2013 IEEE GRSS Data Fusion Contest

The 2013 Contest involved two datasets, a hyperspectral image and a LiDAR-derived Digital Surface Model (DSM), both at the same spatial resolution (2.5m). The hyperspectral imagery has 144 spectral bands in the 380 nm to 1050 nm region. The dataset was acquired over the University of Houston campus and the neighboring urban area. A ground reference with 15 land use classes is available.

Paper: Debes, C.; Merentitis, A.; Heremans, R.; Hahn, J.; Frangiadakis, N.; van Kasteren, T.; Liao, W.; Bellens, R.; Pizurica, A.; Gautama, S.; Philips, W.; Prasad, S.; Du, Q.; Pacifici, F.: Hyperspectral and LiDAR Data Fusion: Outcome of the 2013 GRSS Data Fusion Contest. IEEE J. Sel. Topics Appl. Earth Observ. and Remote Sensing, 7 (6) pp. 2405-2418.

2012 IEEE GRSS Data Fusion Contest

The 2012 Contest was designed to investigate the potential of multi-modal/multi-temporal fusion of very high spatial resolution imagery in various remote sensing applications [6]. Three different types of data sets (optical, SAR, and LiDAR) over downtown San Francisco were made available by DigitalGlobe, Astrium Services, and the United States Geological Survey (USGS), including QuickBird, WorldView-2, TerraSAR-X, and LiDAR imagery. The image scenes covered a number of large buildings, skyscrapers, commercial and industrial structures, a mixture of community parks and private housing, and highways and bridges. Following the success of the multi-angular Data Fusion Contest in 2011, each participant was again required to submit a paper describing in detail the problem addressed, the method used, and final results generated for review.

Paper: Berger, C.; Voltersen, M.; Eckardt, R.; Eberle, J.; Heyer, T.; Salepci, N.; Hese, S.; Schmullius, C.; Tao, J.; Auer, S.; Bamler, R.; Ewald, K.; Gartley, M.; Jacobson, J.; Buswell, A.; Du, Q.; Pacifici, F., “Multi-Modal and Multi-Temporal Data Fusion: Outcome of the 2012 GRSS Data Fusion Contest”, IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol.6, no.3, pp.1324-1340, June 2013.

2011 IEEE GRSS Data Fusion Contest

A set of WorldView-2 multi-angular images was provided by DigitalGlobe for the 2011 Contest. This unique set was composed of five Ortho Ready Standard multi-angular acquisitions, including both 16-bit panchromatic and multispectral 8-band images. The data were collected over Rio de Janeiro (Brazil) in January 2010 within a three-minute time frame with satellite elevation angles of 44.7°, 56.0°, and 81.4° in the forward direction, and 59.8° and 44.6° in the backward direction. Since there was a large variety of possible applications, each participant was allowed to decide on a research topic to work on, exploring the most creative use of optical multi-angular information. At the end of the Contest, each participant was required to submit a paper describing in detail the problem addressed, the method used, and the final result generated. The papers submitted were automatically formatted to hide the names and affiliations of the authors to ensure neutrality and impartiality of the reviewing process.

Paper: F. Pacifici, Q. Du, “Foreword to the Special Issue on Optical Multiangular Data Exploitation and Outcome of the 2011 GRSS Data Fusion Contest”, IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 5, no. 1, pp.3-7, February 2012.

2009-2010 IEEE GRSS Data Fusion Contest

In 2009-2010, the aim of the contest was to perform change detection using multi-temporal and multi-modal data. Two pairs of data sets were available over Gloucester, UK, before and after a flood event. The data set contained SPOT and ERS images (before and after the disaster). The optical and SAR images were provided by CNES. Similar to previous years’ Contests, the ground truth used to assess the results was not provided to the participants. Each set of results was tested and ranked a first time using the Kappa coefficient. The best five results were used to perform decision fusion with majority voting. Then, re-ranking was carried out after evaluating the level of improvement with respect to the fusion results.

Paper: N. Longbotham, F. Pacifici, T. Glenn, A. Zare, M. Volpi, D. Tuia, E. Christophe, J. Michel, J. Inglada, J. Chanussot, Q. Du “Multi-modal Change Detection, Application to the Detection of Flooded Areas: Outcome of the 2009-2010 Data Fusion Contest”, IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 5, no. 1, pp. 331-342, February 2012.
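The evaluation scheme described for the 2009-2010 Contest (ranking by Kappa coefficient, then majority-vote decision fusion of the best results) can be illustrated with a small sketch. This is not the organizers' code: the function names (`cohen_kappa`, `majority_vote`, `rank_and_fuse`), the flat-list representation of a label map, and the tie-breaking rule are assumptions made for illustration, and the final re-ranking step is left out because the source does not say how the improvement was measured.

```python
from collections import Counter


def cohen_kappa(reference, predicted):
    """Cohen's kappa between two equal-length sequences of class labels."""
    if len(reference) != len(predicted) or len(reference) == 0:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(reference)
    # Observed agreement: fraction of pixels where both maps agree.
    observed = sum(r == p for r, p in zip(reference, predicted)) / n
    # Chance agreement: derived from the class frequencies of both maps.
    ref_counts = Counter(reference)
    pred_counts = Counter(predicted)
    expected = sum(ref_counts[c] * pred_counts[c] for c in ref_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both maps are constant and identical
    return (observed - expected) / (1.0 - expected)


def majority_vote(label_maps):
    """Per-pixel majority vote over several label maps of equal length.

    Ties go to the label that appears first among the voters
    (an assumption; the source does not specify tie handling).
    """
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*label_maps)]


def rank_and_fuse(reference, submissions, top_k=5):
    """Rank submissions by kappa and fuse the top_k maps by majority vote."""
    scores = {name: cohen_kappa(reference, labels)
              for name, labels in submissions.items()}
    ranking = sorted(scores, key=scores.get, reverse=True)
    fused = majority_vote([submissions[name] for name in ranking[:top_k]])
    return ranking, scores, fused


# Toy example: 1 = changed (flooded), 0 = unchanged. All values are made up.
reference = [0, 0, 1, 1, 0, 1]
submissions = {
    "team_a": [0, 0, 1, 1, 0, 1],
    "team_b": [0, 1, 1, 1, 0, 1],
    "team_c": [1, 1, 0, 0, 1, 0],
}
ranking, scores, fused = rank_and_fuse(reference, submissions, top_k=3)
print(ranking, fused, cohen_kappa(reference, fused))
```

Fusing an odd number of maps avoids ties for a two-class problem such as flooded versus non-flooded, which may be one reason to fuse five results.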

2008 IEEE GRSS Data Fusion Contest

The 2008 Contest was dedicated to the classification of very high spatial resolution (1.3 m) hyperspectral imagery. The task was again to obtain a classification map as accurate as possible with respect to the unknown (to the participants) ground reference. The data set was collected by the Reflective Optics System Imaging Spectrometer (ROSIS-03) optical sensor with 115 bands covering the 0.43-0.86 μm spectral range.

Paper: G. Licciardi, F. Pacifici, D. Tuia, S. Prasad, T. West, F. Giacco, J. Inglada, E. Christophe, J. Chanussot, P. Gamba, “Decision fusion for the classification of hyperspectral data: outcome of the 2008 GRS-S data fusion contest”, IEEE Transactions on Geoscience and Remote Sensing, vol. 47, no. 11, pp. 3857-3865, November 2009.

2007 IEEE GRSS Data Fusion Contest

In 2007, the Contest theme was urban mapping using synthetic aperture radar (SAR) and optical data, and 9 ERS amplitude data sets and 2 Landsat multi-spectral images were made available. The task was to obtain a classification map as accurate as possible with respect to the unknown (to the participants) ground reference, depicting land cover and land use patterns for the urban area under study.

Paper: F. Pacifici, F. Del Frate, W. J. Emery, P. Gamba, J. Chanussot, “Urban mapping using coarse SAR and optical data: outcome of the 2007 GRS-S data fusion contest”, IEEE Geoscience and Remote Sensing Letters, vol. 5, no. 3, pp. 331-335, July 2008.

2006 IEEE GRSS Data Fusion Contest

The focus of the 2006 Contest was on the fusion of multispectral and panchromatic images [1]. Six simulated Pleiades images were provided by the French National Space Agency (CNES). Each data set included a very high spatial resolution panchromatic image (0.80 m resolution) and its corresponding multi-spectral image (3.2 m resolution). A high spatial resolution multi-spectral image was available as ground reference, which was used by the organizing committee for evaluation but not distributed to the participants.

Paper: L. Alparone, L. Wald, J. Chanussot, C. Thomas, P. Gamba, L. M. Bruce, “Comparison of pansharpening algorithms: Outcome of the 2006 GRS-S data fusion contest”, IEEE Transactions on Geoscience and Remote Sensing, vol. 45, no. 10, pp. 3012–3021, Oct. 2007.

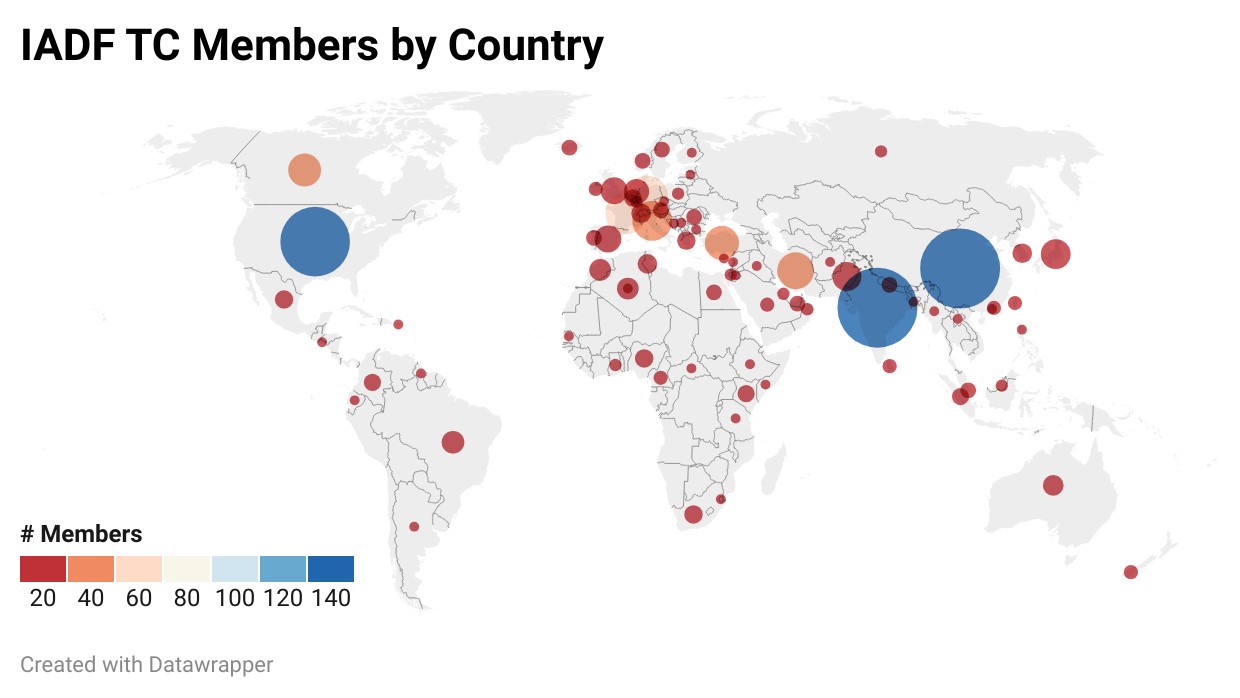

Current membership (as of January 2024)

Contact

The IADF TC is open to a wide range of people with different expertise and backgrounds, working in different application areas. We are happy if you:

- Provide feedback, suggestions, or ideas for future activities

- Propose input for the next newsletter

- Propose the next Data Fusion Contest

- Propose a new IADF Working Group

You can engage with us by contacting the Committee Chairs by email, following us on Twitter, joining the LinkedIn IEEE GRSS Data Fusion Discussion Forum, or joining the IADF TC!